Explain Why?

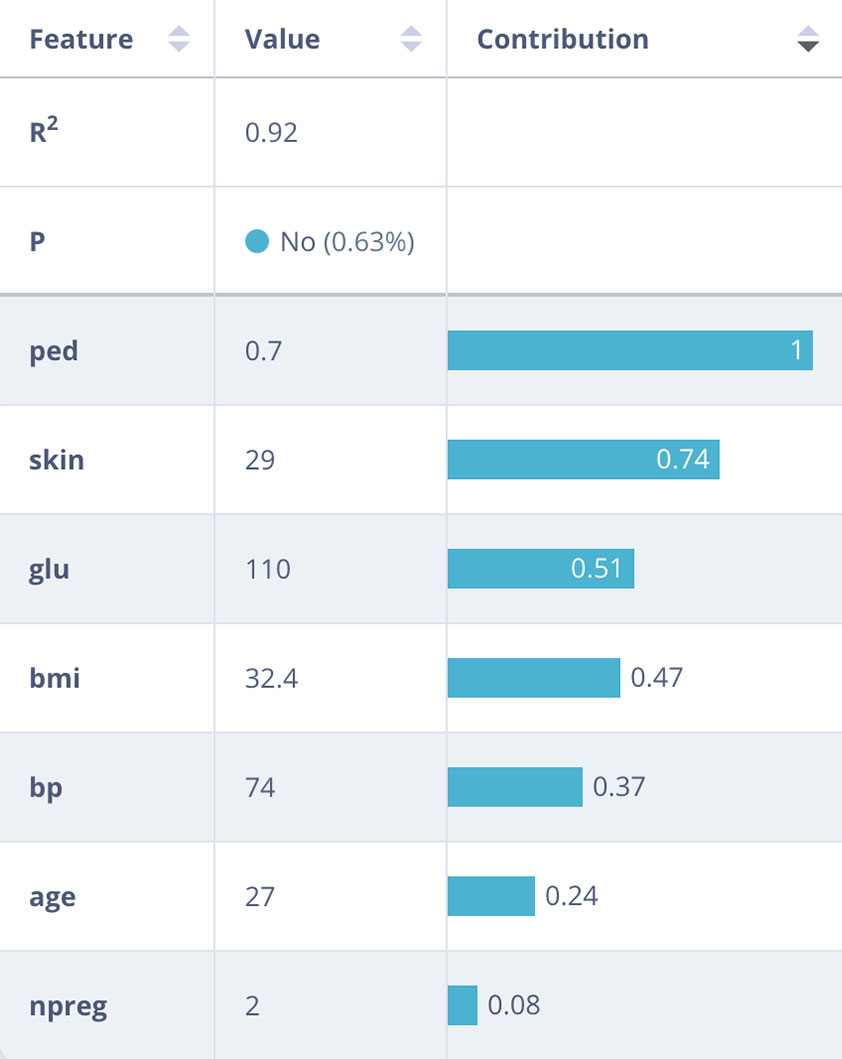

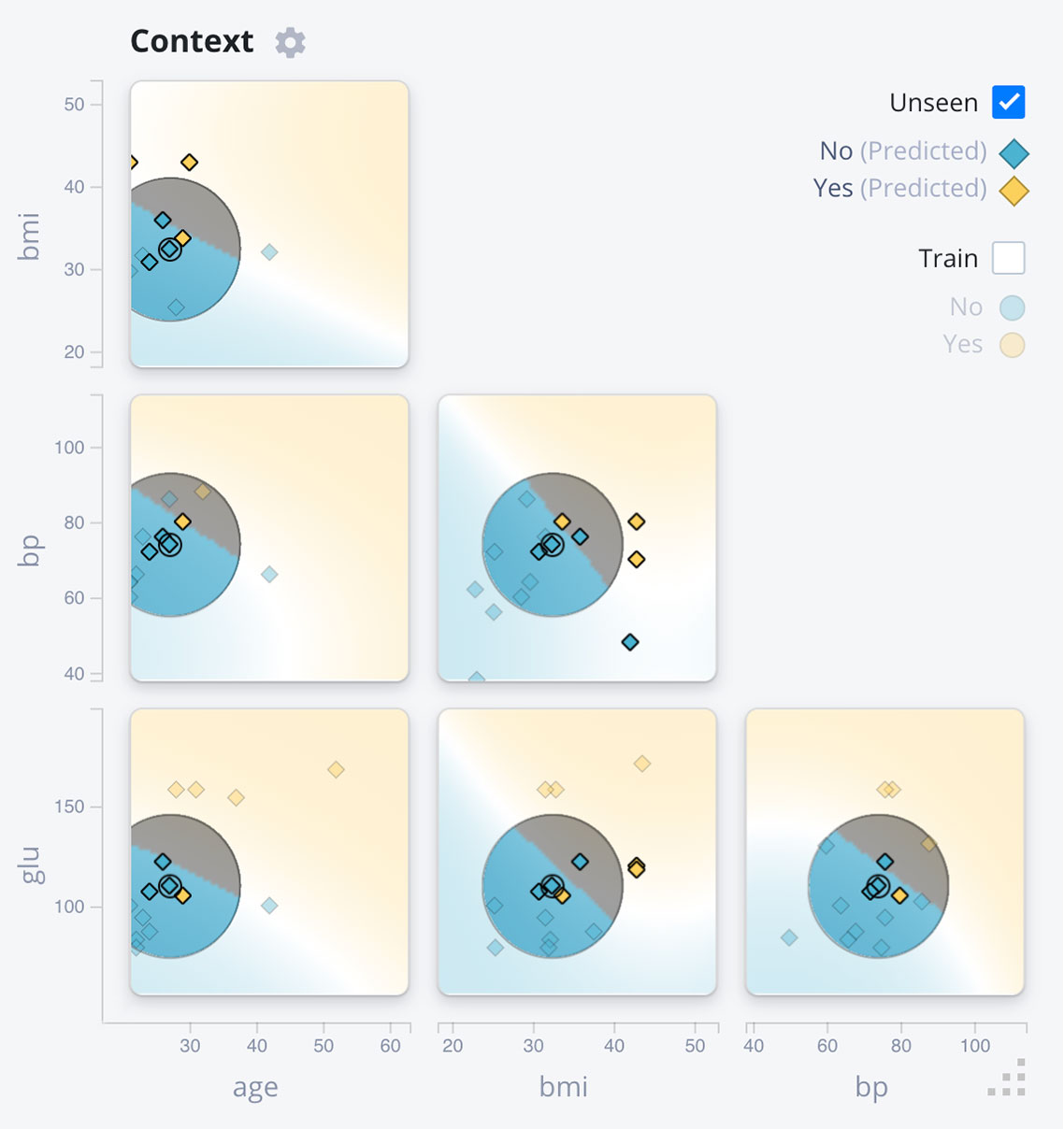

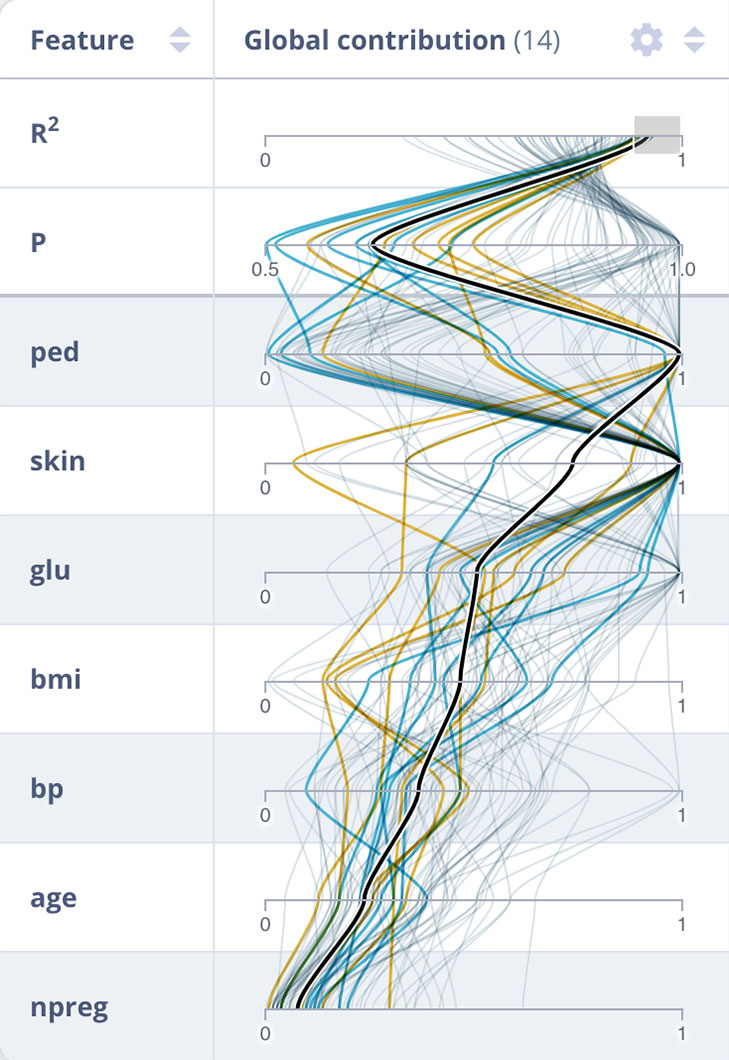

Modern machine learning models are usually applied in a black-box manner: only the input (data) and output (predictions) are considered, while the inner workings are treated as too complex to understand.

Explain How?

To explain a complex machine learning model, we generate a local approximation (or surrogate model) that can be easily explained.
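
The idea of a local approximation can be illustrated with a minimal sketch. It is not necessarily the method used here; it only shows one common way to build a local linear surrogate: sample points near the instance of interest, query the black box, weight the samples by proximity, and fit a weighted linear model. The function `black_box`, the helper `local_surrogate`, and all parameter values (`n_samples`, `scale`, `kernel_width`) are hypothetical and chosen for illustration.

```python
import numpy as np


def black_box(X):
    # Hypothetical stand-in for a complex model: takes rows of inputs,
    # returns one prediction per row.
    return np.sin(X[:, 0]) + X[:, 1] ** 2


def local_surrogate(predict, x, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a weighted linear model to `predict` in the neighbourhood of `x`.

    Returns (intercept, weights); weights[i] approximates how strongly
    feature i influences the prediction near x.
    """
    rng = np.random.default_rng(seed)

    # 1. Perturb the instance to obtain nearby samples.
    X = x + rng.normal(0.0, scale, size=(n_samples, x.size))

    # 2. Query the black box on the perturbed samples.
    y = predict(X)

    # 3. Weight each sample by its proximity to x (Gaussian kernel).
    dist = np.linalg.norm(X - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # 4. Solve weighted least squares on features centred at x.
    A = np.hstack([np.ones((n_samples, 1)), X - x])
    sw = np.sqrt(w)[:, None]
    coef, *_ = np.linalg.lstsq(A * sw, y * sw[:, 0], rcond=None)
    return coef[0], coef[1:]


x = np.array([0.0, 1.0])
intercept, weights = local_surrogate(black_box, x)
print("local feature weights:", weights)
```

The fitted weights are the explanation: they describe how the prediction changes with each feature in the neighbourhood of `x`, and they remain valid only locally. A different instance generally yields a different surrogate.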